TrueDepth machine learning is rapidly becoming one of the most influential technologies shaping the evolution of modern mobile applications. As artificial intelligence continues to gain mainstream traction, companies are increasingly integrating advanced AI capabilities into consumer-facing and enterprise mobile solutions.

The State of Mobile 2025 report highlights this shift by revealing that AI chatbot and AI art generator applications were downloaded almost 1.5 billion times in 2024, generating 1.27 billion USD in in-app purchase revenue. These numbers confirm that AI-powered mobile experiences are not only popular but also commercially viable, encouraging organizations to explore new opportunities made possible by the intersection of machine learning and specialized hardware such as the TrueDepth camera system.

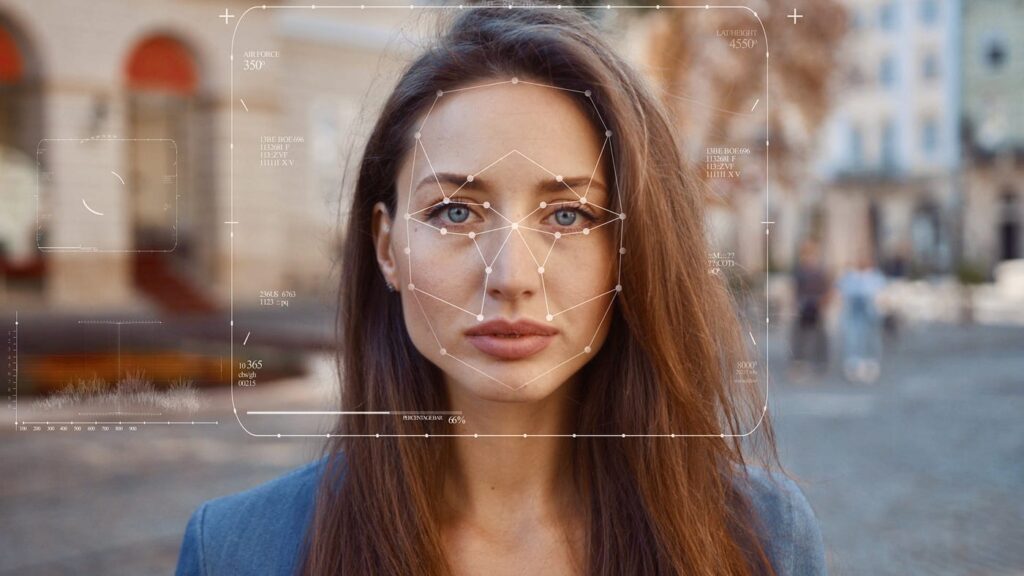

Within the iOS ecosystem, no other hardware component enables more sophisticated facial recognition and motion interpretation than TrueDepth. When paired with machine learning, TrueDepth provides mobile developers with a foundation for creating expressive, intuitive and gesture-driven user experiences.

This article explores the fundamentals of TrueDepth, explains how machine learning is applied within mobile systems, details the tools available to iOS developers, and examines practical implementation models that demonstrate how TrueDepth machine learning can unlock new possibilities in mobile applications. By the end, you will have a clear understanding of how TrueDepth machine learning works and how companies partner with engineering experts like Progressive Robot to build advanced AI-driven mobile applications.

Understanding the TrueDepth Camera System Through a Machine Learning Lens

TrueDepth machine learning begins with understanding the hardware responsible for Apple's advanced facial recognition capabilities. The TrueDepth camera system powers Face ID, which has become the standard authentication method on most iPhones released since the iPhone X; the iPhone SE models, which use Touch ID, are the main exception. Any iPhone with a notch or Dynamic Island uses TrueDepth to map and interpret facial geometry.

The system relies on three specialized components.

The first is a dot projector that casts thousands of invisible infrared dots onto the user's face to create a precise depth map. The second is the flood illuminator, which bathes the face in infrared light so the system can see it even in the dark, allowing Face ID to work regardless of lighting conditions. Finally, the infrared camera reads the dot pattern, captures an infrared image of the face and transmits the data to a dedicated processor, which uses it to construct an accurate 3D representation of the user's facial features.

When a user configures Face ID, TrueDepth creates a baseline dataset of their facial structure. Each time Face ID is used, the system captures new images, compares them with the stored data and uses machine learning to adapt to natural changes in appearance, such as growing facial hair or wearing makeup. This ongoing adaptation improves accuracy and reduces false rejections.

TrueDepth-based authentication is more secure than fingerprint-based systems: Apple puts the probability that a random person could unlock a device with Face ID at less than one in a million, compared with roughly one in 50,000 for Touch ID. The probability is higher for identical twins, siblings who look alike and children under 13, whose distinctive facial features may not have fully developed, but overall, TrueDepth remains one of the most secure biometric systems used in consumer devices.

How Machine Learning Shapes Modern Mobile Systems

TrueDepth machine learning is part of a broader trend in which mobile systems rely heavily on ML to enhance functionality, personalize user interactions and accelerate on-device processing. Machine learning in smartphones is not limited to facial recognition. Predictive text functions analyze typing patterns to suggest appropriate words.

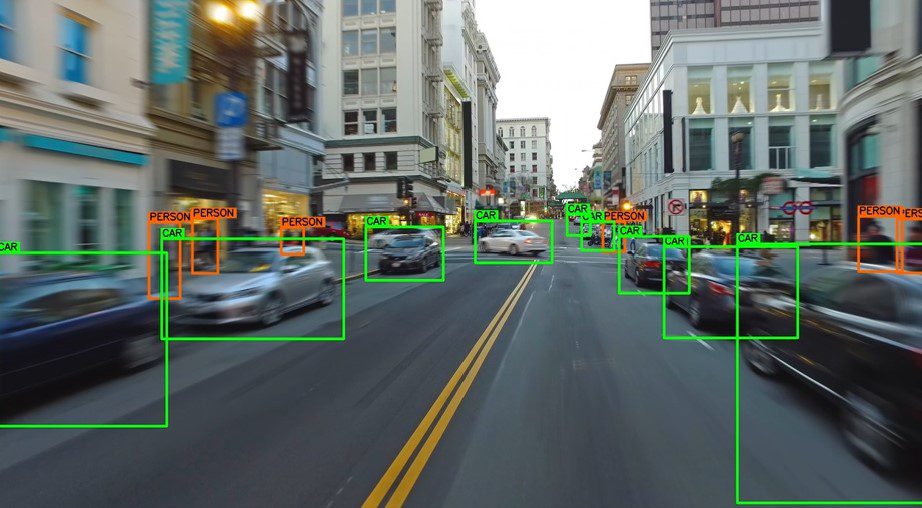

Camera systems use ML-based scene interpretation to differentiate objects, improve exposure and apply depth effects. Voice assistants analyze user speech and respond to commands. Environmental sound recognition identifies important noises such as alarms, doorbells or crying infants.

In mobile development, machine learning refers to models trained on large datasets to detect patterns, classify inputs or generate predictions. Complex machine learning systems often require advanced expertise, but Apple has created tools that allow developers with varying levels of ML knowledge to integrate machine learning into apps.

Pre-trained models are available for common functions such as image recognition, text classification and object detection. Developers can integrate these models directly into their applications without the need for extensive data science experience. For more advanced use cases, Apple enables developers to train custom models using their own datasets, tailoring ML capabilities to the exact requirements of a mobile solution.

In the iOS ecosystem, the Neural Engine accelerates machine learning operations by offloading these tasks to specialized hardware. As a result, ML-driven features run faster, consume less power and can operate entirely on the device, which benefits both privacy and performance.

TrueDepth Machine Learning Integration Through Core ML

The foundation of TrueDepth machine learning in iOS development is Core ML, Apple’s comprehensive machine learning framework. Core ML allows developers to integrate machine learning models directly into mobile applications. It supports both pre-trained models and custom models created through Apple’s development tools. With deep integration into Xcode, Core ML provides developers with live previews, measurements of model performance and optimization features that help reduce memory usage and power consumption.

Because Core ML processes data on the device, applications benefit from faster responses and stronger privacy protections. This makes the combination of Core ML and TrueDepth particularly powerful, as both technologies prioritize real-time processing and secure data handling.

Machine learning models used with TrueDepth often rely on ARKit, Apple’s augmented reality framework. ARKit enables developers to access real-time facial geometry data generated by the TrueDepth system. Through ARKit, developers can track expressions, movements and subtle muscle changes without writing machine learning logic from scratch.

The following sections examine two practical examples of TrueDepth machine learning implementation, describing the logic, structure and workflow behind each.

TrueDepth Machine Learning Example: Using a Pre-Trained Facial Expression Model

In the first example, a developer creates an iOS application that allows users to interact with the interface through gestures such as winking or blinking. The application uses ARKit to track facial movements captured by the TrueDepth camera and processes them through pre-defined expression categories without requiring a custom-trained model.

The application begins by establishing the TrueDepth environment. ARKit supplies a mesh of the face geometry detected by the camera system, which the application renders as an overlay on the user's face. As the user moves, ARKit updates this mesh in real time. Within the delegate method that receives these updates, the application reads the current configuration of facial features, expressed as blend shape coefficients.

These coefficients indicate how far specific facial features have moved, on a scale from 0.0 (neutral) to 1.0 (maximum movement). For example, a blink raises the coefficient of the eyeBlinkLeft or eyeBlinkRight blend shape. Once the application retrieves the relevant blend shapes, it interprets the values: if the value for the left eye exceeds a predefined threshold, such as 0.5, the application categorizes the motion as a left blink. The same logic applies to the right eye.
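A minimal Swift sketch of this thresholding step, assuming an `ARSCNView`-based app. The classification itself is plain Swift, so it can be tested without a device; the iOS-only part uses ARKit's `ARFaceTrackingConfiguration`, `ARSCNFaceGeometry` and `ARFaceAnchor.blendShapes`. The `EyeGesture` enum, the function names and the `FaceGestureDelegate` class are hypothetical names chosen for this illustration, and 0.5 is the example threshold from the text rather than a recommended value.

```swift
#if canImport(ARKit) && os(iOS)
import ARKit
import SceneKit
#endif

/// Hypothetical gesture categories for this sketch.
enum EyeGesture: Equatable {
    case neutral, leftBlink, rightBlink, bothEyes
}

/// Maps two blink coefficients (0.0 = eye open, 1.0 = fully closed) to a gesture.
/// An eye counts as closed only when its coefficient is strictly above the threshold.
func classifyBlink(left: Float, right: Float, threshold: Float = 0.5) -> EyeGesture {
    switch (left > threshold, right > threshold) {
    case (true, true): return .bothEyes
    case (true, false): return .leftBlink
    case (false, true): return .rightBlink
    case (false, false): return .neutral
    }
}

#if canImport(ARKit) && os(iOS)
/// Starts face tracking if the device has a TrueDepth camera; returns false otherwise.
func startFaceTracking(in sceneView: ARSCNView) -> Bool {
    guard ARFaceTrackingConfiguration.isSupported else { return false }
    sceneView.session.run(ARFaceTrackingConfiguration(),
                          options: [.resetTracking, .removeExistingAnchors])
    return true
}

/// Hypothetical delegate: draws the face mesh and reports a gesture on every update.
final class FaceGestureDelegate: NSObject, ARSCNViewDelegate {
    var onGesture: ((EyeGesture) -> Void)?

    // Called when ARKit adds the face anchor: create the wireframe mesh overlay.
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        guard anchor is ARFaceAnchor,
              let device = renderer.device,
              let faceGeometry = ARSCNFaceGeometry(device: device) else { return nil }
        faceGeometry.firstMaterial?.fillMode = .lines
        return SCNNode(geometry: faceGeometry)
    }

    // Called as the face moves: refresh the mesh, then classify the blend shapes.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }
        (node.geometry as? ARSCNFaceGeometry)?.update(from: faceAnchor.geometry)

        let left = faceAnchor.blendShapes[.eyeBlinkLeft]?.floatValue ?? 0
        let right = faceAnchor.blendShapes[.eyeBlinkRight]?.floatValue ?? 0
        let gesture = classifyBlink(left: left, right: right)

        // SceneKit calls this on its rendering thread; UI work must hop to the main queue.
        DispatchQueue.main.async { [weak self] in self?.onGesture?(gesture) }
    }
}
#endif
```

In a view controller, you would call `startFaceTracking(in:)` when the view appears, keep a strong reference to a `FaceGestureDelegate` (the scene view holds its delegate weakly), assign it to `sceneView.delegate` and update the label inside `onGesture`.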

The application then sends the resulting expression category to the user interface, where a label updates to reflect the detected movement. This creates a responsive system in which facial expressions control navigation or trigger actions. Because ARKit already provides a reliable library of supported expressions, developers can implement expressive controls without training any machine learning models.
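ARKit delivers face updates many times per second, so if every update triggered an action, a single held blink would fire it over and over. A common remedy, sketched here as an assumption rather than part of the original design, is to act only on the frame where a gesture first appears. `GestureEdgeDetector` is a hypothetical helper; it is generic so it works with whatever gesture type the app defines.

```swift
/// Hypothetical helper: reports a gesture once per activation instead of on every frame.
struct GestureEdgeDetector<Gesture: Equatable> {
    private let idle: Gesture
    private var previous: Gesture

    init(idle: Gesture) {
        self.idle = idle
        self.previous = idle
    }

    /// Returns the gesture only on the first frame it is seen;
    /// returns nil for the idle state and for repeated frames of the same gesture.
    mutating func update(_ current: Gesture) -> Gesture? {
        defer { previous = current }
        guard current != idle, current != previous else { return nil }
        return current
    }
}
```

Feed `update(_:)` the gesture classified in each face-tracking callback and trigger the UI action only when it returns a value. The struct is not thread-safe, so keep it confined to the thread that delivers the updates and dispatch the resulting UI change with `DispatchQueue.main.async`.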

TrueDepth Machine Learning Example: Using a Custom Core ML Model

The second example highlights an application that relies on a custom-trained Core ML model. The goal of this feature is to determine whether a user’s mouth is open or closed based on TrueDepth-generated imagery.

In this scenario, the mobile application periodically captures snapshots of the user’s facial geometry. Unlike the first example, where the system uses predefined expressions, this version feeds each captured image into a custom classifier created using Create ML. The model is trained on 800 labeled images, equally split between open-mouth and closed-mouth categories.
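The text says snapshots are captured periodically but does not give a rate. A minimal sketch of that pacing, assuming the app receives a timestamp each frame (for example the `time` argument of SceneKit's `renderer(_:updateAtTime:)` callback) and grabs the image with the `snapshot()` method that `ARSCNView` inherits from `SCNView`. `SnapshotThrottle` is a hypothetical helper and the interval is a placeholder to tune.

```swift
/// Hypothetical helper that limits how often snapshots are sent to the classifier,
/// so inference runs a few times per second instead of on every rendered frame.
struct SnapshotThrottle {
    /// Minimum number of seconds between two snapshots (a tuning assumption).
    let interval: Double
    private var lastCapture: Double?

    init(interval: Double) {
        self.interval = interval
    }

    /// Returns true, and records the time, when no snapshot has been taken yet
    /// or at least `interval` seconds have passed since the last accepted one.
    mutating func shouldCapture(at time: Double) -> Bool {
        if let last = lastCapture, time - last < interval {
            return false
        }
        lastCapture = time
        return true
    }
}
```

With an interval of 0.5 seconds, frames arriving at 0.0, 0.2, 0.5 and 0.9 seconds produce snapshots at 0.0 and 0.5 only.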

The images consist of TrueDepth face masks rendered in a solid fill mode, which ensures a consistent representation across all training samples. Because the mask captures facial geometry rather than skin tone or lighting, this uniformity improves model accuracy without requiring data from many different people.

After training, Create ML allows developers to validate the model by supplying a set of test images and reviewing the classification results. Once accuracy meets the expected threshold, the developer exports the model and includes it in the iOS application.
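Create ML reports validation and testing accuracy itself. If you also want to check the exported model against your own held-out images, the metric is the share of images whose predicted label matches the true one. A minimal sketch; the function name and the "open"/"closed" labels are hypothetical, and the source does not state which accuracy threshold it used.

```swift
/// Share of test images whose predicted label equals the expected label.
/// Both arrays must list results for the same images in the same order.
func accuracy(predicted: [String], expected: [String]) -> Double {
    precondition(predicted.count == expected.count, "one prediction per test image")
    guard !expected.isEmpty else { return 0 }
    let correct = zip(predicted, expected).filter { pair in pair.0 == pair.1 }.count
    return Double(correct) / Double(expected.count)
}

// Example: three of four test images classified correctly.
let score = accuracy(predicted: ["open", "open", "closed", "closed"],
                     expected: ["open", "closed", "closed", "closed"])
print(score) // 0.75
```

Because the training set is split evenly between the two classes, overall accuracy is a reasonable summary here; with an imbalanced test set, per-class accuracy would be more informative.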

Inside the application, each new snapshot is converted into a format suitable for machine learning inference. A request handler processes the image using the Core ML model and produces a classification result. To maintain reliability, the system only accepts a prediction when the model's confidence exceeds 80 percent. If the model classifies the mouth as open, the interface reacts accordingly; the same happens when the mouth is classified as closed.
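A minimal sketch of this inference step, assuming the request handler is Vision's `VNImageRequestHandler` running a `VNCoreMLRequest`, a standard way to apply a Core ML image classifier to a `CGImage`. The 0.8 cut-off comes from the text; `LabelScore`, `acceptedLabel(from:minimumConfidence:)` and `classifyMouth(in:model:completion:)` are hypothetical names, and the labels will be whatever class names the Create ML model was trained with. The confidence gate is plain Swift so it can be tested on its own.

```swift
#if canImport(Vision) && canImport(CoreML)
import CoreGraphics
import CoreML
import Vision
#endif

/// One label/confidence pair produced by a classifier.
struct LabelScore {
    let label: String
    let confidence: Float
}

/// Returns the most confident label only if its confidence is strictly above the threshold.
func acceptedLabel(from scores: [LabelScore], minimumConfidence: Float = 0.8) -> String? {
    guard let best = scores.max(by: { $0.confidence < $1.confidence }),
          best.confidence > minimumConfidence else { return nil }
    return best.label
}

#if canImport(Vision) && canImport(CoreML)
/// Runs a Core ML image classifier on one snapshot through Vision.
/// `completion` receives nil when the model is not confident enough or the request fails.
func classifyMouth(in image: CGImage, model: MLModel,
                   completion: @escaping (String?) -> Void) {
    guard let visionModel = try? VNCoreMLModel(for: model) else {
        completion(nil)
        return
    }
    let request = VNCoreMLRequest(model: visionModel) { finished, _ in
        let observations = (finished.results as? [VNClassificationObservation]) ?? []
        let scores = observations.map { LabelScore(label: $0.identifier, confidence: $0.confidence) }
        completion(acceptedLabel(from: scores))
    }
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    do {
        try handler.perform([request]) // synchronous: the completion runs before this returns
    } catch {
        completion(nil)
    }
}
#endif
```

Because `perform(_:)` is synchronous, call `classifyMouth` from a background queue and dispatch the resulting UI change to the main queue; a `UIImage` snapshot supplies its input through the `cgImage` property.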

This demonstrates how TrueDepth machine learning enables developers to move beyond predefined expression tracking and build custom interaction models tailored to specific use cases.

The Growing Importance of AI-Driven Mobile Development

TrueDepth machine learning represents only one segment of a larger movement in which mobile applications merge hardware capabilities with intelligent algorithms. Companies are increasingly using machine learning to enhance user experiences, automate tasks, personalize content and build new forms of user engagement. As Apple continues to expand its machine learning tools, iOS developers gain access to frameworks that simplify the deployment of ML features while maintaining performance and privacy standards.

However, implementing more advanced applications that rely on custom machine learning models, large datasets or real-time processing pipelines requires specialized expertise. For organizations without in-house AI engineering capabilities, partnering with experienced development firms becomes essential.

Progressive Robot supports companies seeking to build AI-powered mobile applications by offering end-to-end development services, model training expertise and mobile engineering proficiency. With a strong background in designing intelligent mobile systems, Progressive Robot helps organizations bring sophisticated machine learning concepts to life within iOS ecosystems and other platforms.

Advancing Mobile Innovation Through TrueDepth Machine Learning

TrueDepth machine learning presents an opportunity for mobile developers to design applications with expressive and intuitive interactions. By combining the precision of the TrueDepth camera system with machine learning models, mobile apps can interpret facial expressions, gestures and subtle movements in real time. Apple’s Core ML, Create ML and ARKit frameworks empower developers to integrate these capabilities with relative ease, whether through pre-trained models or custom solutions.

As AI-driven mobile experiences continue to grow in popularity, organizations increasingly look for ways to differentiate their applications. TrueDepth machine learning offers a compelling path forward, enabling richer interactions, improved accessibility and more engaging user experiences.

Companies seeking to integrate advanced ML capabilities into their iOS solutions can benefit significantly from collaborating with specialized engineering partners like Progressive Robot, whose expertise in mobile development and artificial intelligence can accelerate innovation and ensure high-quality outcomes.

If you want to learn how Progressive Robot can support your machine learning and mobile development needs, you can reach out directly through our contact form and explore how TrueDepth machine learning can transform your next iOS application.